Using Segmentation to Improve Strategy & Predictive Modelling

Improve the accuracy and insights generated from your predictive modelling by using segmentation first

We all want to improve the accuracy and insights generated from predictive modelling, and we all like to believe that consumer behaviour is predictable (ha!). The following is a simple philosophy for building better predictive models and a more actionable strategy: segment first.

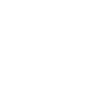

Separating consumers according to the causes of their behaviour generates strategic insights, and those insights lead to better actions. Predictive accuracy also improves when a different model is applied to each segment, rather than one model to the whole database. Segmentation therefore makes the models more accurate and generates better insights that lead to smarter strategies for each segment (see Figure 1 below).

FIGURE 1

Why segmentation is a strategic, not an analytic, process

First, be aware that segmentation is about strategy. Analytics is only part (the most fun part!) of the process. As mathematics is the handmaiden of science (according to Albert Einstein), analytics is the handmaiden of strategy. Analytics without strategy is like an adventure movie with no plot: there may be explosions, shootouts and car chases, but without a story, it has no meaning.

The four Ps of strategic marketing:

- PARTITION: This is segmentation. Segments should be homogeneous within and heterogeneous between.

- PROBE: Creating new variables and adding third-party overlay data or marketing research. This fleshes out the segments.

- PRIORITISE: This step uses financial valuations (lifetime value, contribution margin, ROI, etc.) to focus strategy.

- POSITION: After the above, the four Ps of tactical marketing (product, price, promotion and place) are applied differently to each segment to extract the most value. Each segment requires a different strategy (which is why they are segments).

Note that segmentation is the first of the four Ps. The bottom line is that the more differentiated the segments are, the more actionable the strategy can be.

Knowing which algorithm to use

Those who have read my earlier works know I advocate latent class analysis (LCA) as the state of the art in segmentation. K-means is probably LCA’s closest competitor, although SVM is catching up, mostly because it is free using R or Python, etc. However, LCA offers superior performance for several reasons:

- Latent class does not require the analyst to state the number of segments to find, unlike K-means. LCA's fit statistics (such as BIC) tell the analyst the optimal number of segments.

- Latent class does not require the analyst to dictate which variables are used for segment definition, again unlike K-means. LCA tells the analyst which variables are significant.

In short, unlike K-means, there are no arbitrary decisions the analyst needs to make. The LCA process finds the optimal solution.

Latent class maximizes the likelihood of the scores observed on the segmenting variables, which are hypothesized to arise from membership in an unobserved (latent) segment. That is, LCA is a probabilistic model.
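To make "probabilistic model" concrete, here is a minimal pure-Python sketch of a latent class model for binary (0/1) segmenting variables, fitted by expectation-maximization (EM), with BIC used to compare candidate numbers of segments (lower is better). It is an illustration under simplifying assumptions (binary indicators only, a single random start, a fixed number of iterations) and is not the author's software; dedicated LCA tools also handle mixed variable types, multiple starts and significance tests for each variable. The function names and the simulated data are hypothetical.

```python
import math
import random


def fit_lca(data, n_classes, n_iter=100, seed=0):
    """EM for a latent class model with binary indicators.

    data: list of rows of 0/1 values. Returns (weights, probs, loglik_history),
    where probs[k][j] = P(item j = 1 | class k).
    """
    rng = random.Random(seed)
    n_items = len(data[0])
    weights = [1.0 / n_classes] * n_classes
    # Random starting item probabilities, kept away from 0 and 1.
    probs = [[rng.uniform(0.25, 0.75) for _ in range(n_items)]
             for _ in range(n_classes)]
    history = []
    for _ in range(n_iter):
        # E-step: posterior class-membership probabilities for every row.
        resp, loglik = [], 0.0
        for row in data:
            joint = []
            for k in range(n_classes):
                p = weights[k]
                for j, x in enumerate(row):
                    p *= probs[k][j] if x else 1.0 - probs[k][j]
                joint.append(p)
            total = sum(joint)
            loglik += math.log(total)
            resp.append([p / total for p in joint])
        history.append(loglik)
        # M-step: re-estimate class sizes and item probabilities.
        for k in range(n_classes):
            nk = max(sum(r[k] for r in resp), 1e-12)
            weights[k] = nk / len(data)
            for j in range(n_items):
                hits = sum(r[k] * row[j] for r, row in zip(resp, data))
                probs[k][j] = min(max(hits / nk, 1e-6), 1.0 - 1e-6)
    return weights, probs, history


def bic(loglik, n_classes, n_items, n_obs):
    # Free parameters: (K - 1) class weights plus K * J item probabilities.
    n_params = (n_classes - 1) + n_classes * n_items
    return -2.0 * loglik + n_params * math.log(n_obs)


def simulate(n_per_class=200, n_items=6, seed=1):
    # Hypothetical data: two latent segments with opposite response patterns.
    rng = random.Random(seed)
    rows = []
    for p in (0.9, 0.1):
        for _ in range(n_per_class):
            rows.append([1 if rng.random() < p else 0 for _ in range(n_items)])
    return rows


data = simulate()
scores = {k: bic(fit_lca(data, k)[2][-1], k, 6, len(data)) for k in (1, 2, 3)}
best_k = min(scores, key=scores.get)  # the lowest BIC suggests the segment count
print(scores, best_k)
```

The log-likelihood history is kept so you can check convergence: EM never decreases it from one iteration to the next.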

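K-means assigns each observation to the segment with the nearest centroid, using Euclidean distance across the segmenting variables, and iterates to reduce the within-segment sum of squared distances. It optimizes a geometric criterion rather than a probabilistic model, so it behaves more like a mathematical business rule.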
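For comparison, here is a minimal pure-Python sketch of K-means (Lloyd's algorithm). The quantity it works with is the within-segment sum of squared Euclidean distances (often called inertia); the sketch records that value at each iteration, and it never increases, so the result is a local optimum of a geometric criterion rather than of a likelihood. Note that the analyst must still supply k. The function names and the toy points are hypothetical.

```python
import random


def sq_dist(a, b):
    # Squared Euclidean distance between two points.
    return sum((x - y) ** 2 for x, y in zip(a, b))


def kmeans(points, k, n_iter=50, seed=0):
    """Lloyd's algorithm. Returns (labels, centroids, inertia_history)."""
    rng = random.Random(seed)
    centroids = [list(p) for p in rng.sample(points, k)]
    labels = [0] * len(points)
    history = []
    for _ in range(n_iter):
        # Assignment step: each point goes to its nearest centroid.
        labels = [min(range(k), key=lambda c: sq_dist(p, centroids[c]))
                  for p in points]
        # Objective: within-cluster sum of squared distances (inertia).
        history.append(sum(sq_dist(p, centroids[c])
                           for p, c in zip(points, labels)))
        # Update step: move each centroid to the mean of its members.
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:  # keep the old centroid if a cluster empties
                centroids[c] = [sum(col) / len(members) for col in zip(*members)]
    return labels, centroids, history


points = [(0, 0), (0, 1), (1, 0), (10, 10), (10, 11), (11, 10)]
labels, centroids, history = kmeans(points, k=2)
print(labels, round(history[-1], 4))
```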

Why does segmentation improve predictive modelling accuracy?

Segmentation improves modelling accuracy because, instead of one overall (average) model, there is a different model for each segment. Each model is fitted to a more homogeneous group, so the error is smaller.

Because each segment has its own model, it is quite possible for different variables to be significant in each one. The example below illustrates just that, and this also leads to additional insights (see Figure 2).

The simple answer is that with one model, the dependent variable is, say, 100 on average, plus or minus 75. With three models (one for each segment), it becomes 50 plus or minus 25, 100 plus or minus 25 and 150 plus or minus 25, so accuracy is much better.
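The arithmetic behind this can be checked with a toy example. This minimal sketch uses hypothetical values that mirror the 50/100/150 illustration (each segment's outcomes sit 25 units either side of its own mean) and measures spread as root mean squared error (RMSE): predicting everyone with the overall mean versus predicting each subscriber with their segment mean.

```python
import math


def rmse(values, prediction):
    # Root mean squared error of predicting every value with one number.
    return math.sqrt(sum((v - prediction) ** 2 for v in values) / len(values))


def pooled_rmse(segments):
    # One overall model: predict every subscriber with the grand mean.
    values = [v for vals in segments.values() for v in vals]
    return rmse(values, sum(values) / len(values))


def segmented_rmse(segments):
    # One model per segment: predict each subscriber with their segment mean.
    sq, n = 0.0, 0
    for vals in segments.values():
        mean = sum(vals) / len(vals)
        sq += sum((v - mean) ** 2 for v in vals)
        n += len(vals)
    return math.sqrt(sq / n)


# Hypothetical toy data: segment means of 50, 100 and 150, each plus/minus 25.
segments = {"low": [25, 75], "mid": [75, 125], "high": [125, 175]}
print(round(pooled_rmse(segments), 2))     # 47.87
print(round(segmented_rmse(segments), 2))  # 25.0
```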

FIGURE 2

Segmenting variables for model improvement

When segmenting, use causal variables, not resulting variables. For example, if you are building a demand model where units are the dependent variable, the segmentation should be based on the things that cause demand to move, NOT on demand itself. In this instance, you should use sensitivity to discounts, marcomm stimulation, seasonality, competitive pressure, etc., rather than segmenting on revenue or units (these are resulting variables, that is, the things you are trying to impact).

After segmenting, elasticity can be calculated, market basket penetration can be ascertained and marketing communication valuation (even media mix modelling) can be completed for each segment. (Imagine the insights!) Then a different demand model can be built for each segment.
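As one example of a per-segment calculation, price elasticity can be estimated separately for each segment with a log-log regression, where the slope on ln(price) is the elasticity. This is a minimal sketch with hypothetical, noise-free demand curves (the segment names and the elasticities of -2.5 and -0.5 are invented for illustration); real demand data would also need controls for the causal drivers listed above, such as seasonality and promotions.

```python
import math


def ols_slope(xs, ys):
    # Ordinary least squares slope of ys on xs.
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    return sxy / sxx


def price_elasticity(prices, units):
    # In ln(units) = a + e * ln(price), the slope e is the price elasticity.
    return ols_slope([math.log(p) for p in prices], [math.log(u) for u in units])


# Hypothetical segments with constant-elasticity demand curves.
prices = [8.0, 9.0, 10.0, 11.0, 12.0]
segment_demand = {
    "deal_seekers": [1000 * p ** -2.5 for p in prices],
    "loyal": [200 * p ** -0.5 for p in prices],
}
for name, units in segment_demand.items():
    print(name, round(price_elasticity(prices, units), 2))
```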

Example: Churn modelling

First, a little background on churn modelling and survival analysis. Churn (attrition) is a common metric in marketing analytics, and there is usually a strategy for combating it. The analytic technique used to model it is survival modelling.

Say we have data from a telecom firm that wants to understand the causes of churn and find strategies to slow it down. The solution is to first segment subscribers based on the causes of churn and then fit a different survival model for each segment. There should be a different strategy for each segment, based on its different sensitivities to each cause.

Survival modelling became popular in the early 1970s with the proportional hazards model, known as Cox regression (PROC PHREG in SAS). That is a semi-parametric approach (the baseline hazard is left unspecified), but the dependent variable is the hazard rate, which is difficult to interpret and very difficult to explain to a client. Most marketing analysts use a parametric approach (PROC LIFEREG in SAS), in which the dependent variable is ln(time until the event), where the event is churn.
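For reference, the two model forms can be written in standard notation (the symbols are illustrative, not taken from the original analysis, and the coefficient vectors $\boldsymbol{\gamma}$ and $\boldsymbol{\beta}$ are different quantities). The Cox model works on the hazard, with an unspecified baseline hazard $h_0(t)$; the LIFEREG-style accelerated failure time model works directly on log time, with an error distribution (for example Weibull or log-normal) chosen by the analyst:

$$h(t \mid \mathbf{x}_i) = h_0(t)\,\exp\!\left(\mathbf{x}_i^\top \boldsymbol{\gamma}\right)$$

$$\ln T_i = \beta_0 + \mathbf{x}_i^\top \boldsymbol{\beta} + \sigma\,\varepsilon_i$$

In the log-time form, a one-unit increase in $x_{ij}$, holding everything else fixed, multiplies time until churn by $e^{\beta_j}$, which is why its coefficients translate directly into days.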

Survival modelling came out of biostatistics and has become very powerful in marketing. It is a technique specifically designed to study time-until-event problems. In marketing, this often means ‘time until churn’, but it can also be ‘time until response’, ‘time until purchase’, etc.

The power of survival modelling comes from two sources:

- A prediction of time until churn can be calculated for each subscriber

- Because it is a regression equation, its independent variables show how to increase or decrease the time until churn for every subscriber. This enables personalized strategies for each subscriber.
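Both points can be sketched in a few lines: score a subscriber, then re-score them after changing one variable. This is a minimal sketch assuming a log-normal accelerated failure time model, under which exp(linear predictor) is the predicted median time until churn; the intercept, coefficients, variable names and subscriber are all hypothetical.

```python
import math


def predicted_median_days(intercept, coefs, x):
    # Assumes ln(T) = intercept + sum(b * x) + sigma * eps with eps ~ N(0, 1),
    # so exp(linear predictor) is the predicted median time until churn.
    return math.exp(intercept + sum(coefs[name] * value for name, value in x.items()))


def what_if(intercept, coefs, x, name, delta=1.0):
    # Re-score one subscriber after changing a single variable by delta.
    changed = dict(x)
    changed[name] = changed[name] + delta
    return predicted_median_days(intercept, coefs, changed)


# Hypothetical coefficients and subscriber, for illustration only.
intercept = 4.2
coefs = {"features": -0.05, "discount_pct": 0.03}
subscriber = {"features": 3, "discount_pct": 12}
base = predicted_median_days(intercept, coefs, subscriber)
more_discount = what_if(intercept, coefs, subscriber, "discount_pct")
print(round(base, 1), round(more_discount, 1))
```

The ratio of the two predictions is exactly e raised to the changed variable's coefficient, so the same what-if logic applies to every subscriber in the segment.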

So, Table 1 below shows a simple segmentation, with three segments. The mean values are shown (as KPIs) for each segment as a general profile. The segmenting variables are the discount amount, things that impact price (data, minutes, features, phones, etc.), IVR, dropped calls, income, size of household, etc. Note that percent of churn is NOT a segmenting variable.

TABLE 1

Segment 1

This is the largest segment, at 48% of subscribers, but it brings in only 7% of the revenue. They are either an opportunity or should be de-marketed. They have the shortest tenure, the fewest features and the fewest minutes, the most of them are on a billing plan, they pay mostly by check rather than credit card, and they are not marketed to but respond the most. They seem to be looking for a deal. They use the most discounts (when they get them), have the lowest household income, and are the youngest with the least education. They are probably sensitive to price, which causes them to churn more than the other segments: 44% churn, after only 94.1 days.

Segment 2

These 29% of subscribers bring in 30% of the revenue, so they contribute roughly their fair share. They have the most phones and the largest households but get the most dropped calls. 39% churn, on average 9 months after subscribing.

Segment 3

The smallest segment, at 22%, brings in a whopping 63% of the revenue. They are loyal and satisfied, buy the most features and keep coming back. They do not have many phones because they have the smallest households. They basically do not use IVR, and only 22% are on a billing plan. They have the highest education and household income and are mostly middle-aged. They do not use many discounts and pretty much ignore marcomms, even though they are sent the most communications. It takes them over a year to attrite, and only 21% do.
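A profile like Table 1 is mostly bookkeeping: each segment's share of subscribers and of revenue, plus the mean of each KPI. A minimal sketch, assuming each subscriber record is a dictionary with a segment label and a revenue field (the field names and records are hypothetical). Churn is profiled here as a KPI even though it was not used to form the segments.

```python
from collections import defaultdict


def profile_segments(records, kpis):
    """Per-segment share of subscribers, share of revenue and KPI means."""
    groups = defaultdict(list)
    for rec in records:
        groups[rec["segment"]].append(rec)
    n_total = len(records)
    revenue_total = sum(rec["revenue"] for rec in records)
    profile = {}
    for seg, rows in sorted(groups.items()):
        profile[seg] = {
            "pct_subscribers": 100.0 * len(rows) / n_total,
            "pct_revenue": 100.0 * sum(r["revenue"] for r in rows) / revenue_total,
            # Mean of each KPI, including outcomes such as churn.
            **{k: sum(r[k] for r in rows) / len(rows) for k in kpis},
        }
    return profile


# Hypothetical records for illustration only.
records = [
    {"segment": 1, "revenue": 10.0, "tenure_days": 90, "churned": 1},
    {"segment": 1, "revenue": 10.0, "tenure_days": 100, "churned": 0},
    {"segment": 3, "revenue": 80.0, "tenure_days": 400, "churned": 0},
]
for seg, row in profile_segments(records, ["tenure_days", "churned"]).items():
    print(seg, row)
```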

Interpretation and insights

It is the insights that come from the model output that drive the strategies (see Table 2 below). The left side of Table 2 shows the coefficients from a churn model for each segment. (A blank cell means that variable was insignificant in that segment's model.)

TABLE 2

For example, take the independent variable number of features (# features), which indicates how many features on the phone each subscriber has. To interpret the coefficient, compute (e^B - 1) * mean, where B is the coefficient and the mean is the segment's average time until churn in days. That is, for segment 1, ((e^-0.055) - 1) * 94.1 = -5.04. This means that for every additional feature a subscriber in segment 1 has, their time until churn goes down (decreases) by 5.04 days, from 94.1 to 89.06.

For segment 3, ((e^0.057) - 1) * 388.2 = 22.77. This means that for every additional feature a subscriber in segment 3 has, their time until churn goes out (increases) by 22.77 days, from 388.2 to 410.97.
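This arithmetic is easy to script. The minimal sketch below reproduces the two # features calculations from the coefficients and segment means quoted in the text; the function name is hypothetical.

```python
import math


def days_change(beta, mean_days):
    # Change in time until churn for a one-unit increase in a variable,
    # using the (e^B - 1) * mean interpretation of a log-time coefficient.
    return (math.exp(beta) - 1.0) * mean_days


print(round(days_change(-0.055, 94.1), 2))  # -5.04 (segment 1, # features)
print(round(days_change(0.057, 388.2), 2))  # 22.77 (segment 3, # features)
```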

The insights from this one product variable might indicate that segment 1 is sensitive to price and to anything that causes price (their bill) to increase: as their bill increases, these subscribers tend to churn. Note that this segment has the lowest income and least education. Likewise, segment 3 appears to be brand loyal: as they get more features they tend to stay subscribed for longer, by about 22.77 days per additional feature. An ROI can be calculated using these insights.

Finally, let’s look at discounts, where a direct ROI can be calculated. If the firm gives subscribers in a segment an extra X% discount, that results in a Y-day increase in time until churn (TT churn). That extra time can be valued at the current bill rate.

For segment 1, which has an average discount rate of 12%, increasing the rate to 13% pushes TT churn out by 34.46 days. Clearly, this segment is very sensitive to price. This would not be known unless a segmentation and churn model had been implemented. Conversely, segment 3 is not sensitive to price and is very brand loyal: if its discount went from 2% to 3%, TT churn would go out by only 0.39 days. They will take the discount, but it is nearly irrelevant.
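The valuation can be sketched as a simple ROI. This is a minimal sketch under a stated assumption: the gain is the extra days of tenure valued at the current daily bill, and the cost (an assumption of this sketch, not from the original analysis) is the extra discount given up on every day of the new, longer tenure. The days and rates are the figures quoted in the text; the function name and the default daily bill are hypothetical.

```python
def discount_roi(extra_days, base_days, old_rate, new_rate, daily_bill=1.0):
    """ROI of raising a segment's discount rate (hypothetical helper).

    Gain: extra days of tenure, valued at the current daily bill.
    Cost (assumed): the added discount on every day of the longer tenure.
    """
    gain = extra_days * daily_bill
    cost = (new_rate - old_rate) * daily_bill * (base_days + extra_days)
    return (gain - cost) / cost


# Figures quoted in the text: extra days, mean tenure and discount rates.
seg1 = discount_roi(extra_days=34.46, base_days=94.1, old_rate=0.12, new_rate=0.13)
seg3 = discount_roi(extra_days=0.39, base_days=388.2, old_rate=0.02, new_rate=0.03)
print(round(seg1, 1), round(seg3, 2))
```

Because both gain and cost scale with the daily bill, the ratio does not depend on it; the bill rate matters only if you want the net value in currency. Under these assumptions the extra point of discount pays back strongly for segment 1 and loses money for segment 3.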

Note also that segment 2 seems more sensitive to dropped calls, compared to segment 1 and segment 3. This knowledge allows a strategy specifically aimed at segment 2.

What if there was no segmentation?

The above should help demonstrate how segmentation drives more insights for strategy and more accuracy for modelling, with better actionability. Yet what if only one model were developed overall, instead of one per segment?

Let’s look at the variable # features. The single overall model has a coefficient of -0.04. Note that it is negative, on average, which means that as the number of features increases, the time until churn decreases. Strategically, this would suggest not upselling more features to subscribers, because their time until churn would be shorter. However, this is the wrong decision for segment 3: with more features they remain happier and more loyal, and the best customers are those who stay on the database longer and keep paying. Therefore, using one model for the whole database would give the wrong indication for the segment that drives 63% of the revenue.
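The sign flip is easy to reproduce with toy numbers. This minimal sketch uses hypothetical data and plain least squares on tenure in days (a stand-in for the survival model, not the author's model): a large segment loses 10 days per feature and a small, loyal segment gains 20 days per feature, yet the pooled slope comes out negative because the large segment dominates.

```python
def ols_slope(xs, ys):
    # Ordinary least squares slope of ys on xs.
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    return sxy / sxx


def slope(rows):
    return ols_slope([x for x, _ in rows], [y for _, y in rows])


# Hypothetical (features, tenure_days) pairs for two segments.
features = [1, 2, 3, 4, 5]
large = [(x, 100 - 10 * x) for x in features] * 4  # 20 subscribers
loyal = [(x, 400 + 20 * x) for x in features]      # 5 subscribers

print(slope(large), slope(loyal), slope(large + loyal))  # -10.0 20.0 -4.0
```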

Another example is the number of emails sent. For segments 1 and 2, as more emails are sent, TT churn goes out. But for segment 3, more emails cause email fatigue, and TT churn comes in. This is an important strategic insight: do NOT send more emails to the very loyal segment. They do not need them, so the emails become an irritation and tend to cause churn. Again, this insight would not be found without segmenting first.

In conclusion, segmentation should be seen as a strategic process, not an analytic one. Segmentation has uses beyond merely separating the market into classifications that are homogeneous within and heterogeneous between: it should be used to make predictive modelling more accurate and to achieve more actionable strategic insights.